Amazon Nvidia Titan X 12 Gb For Mac

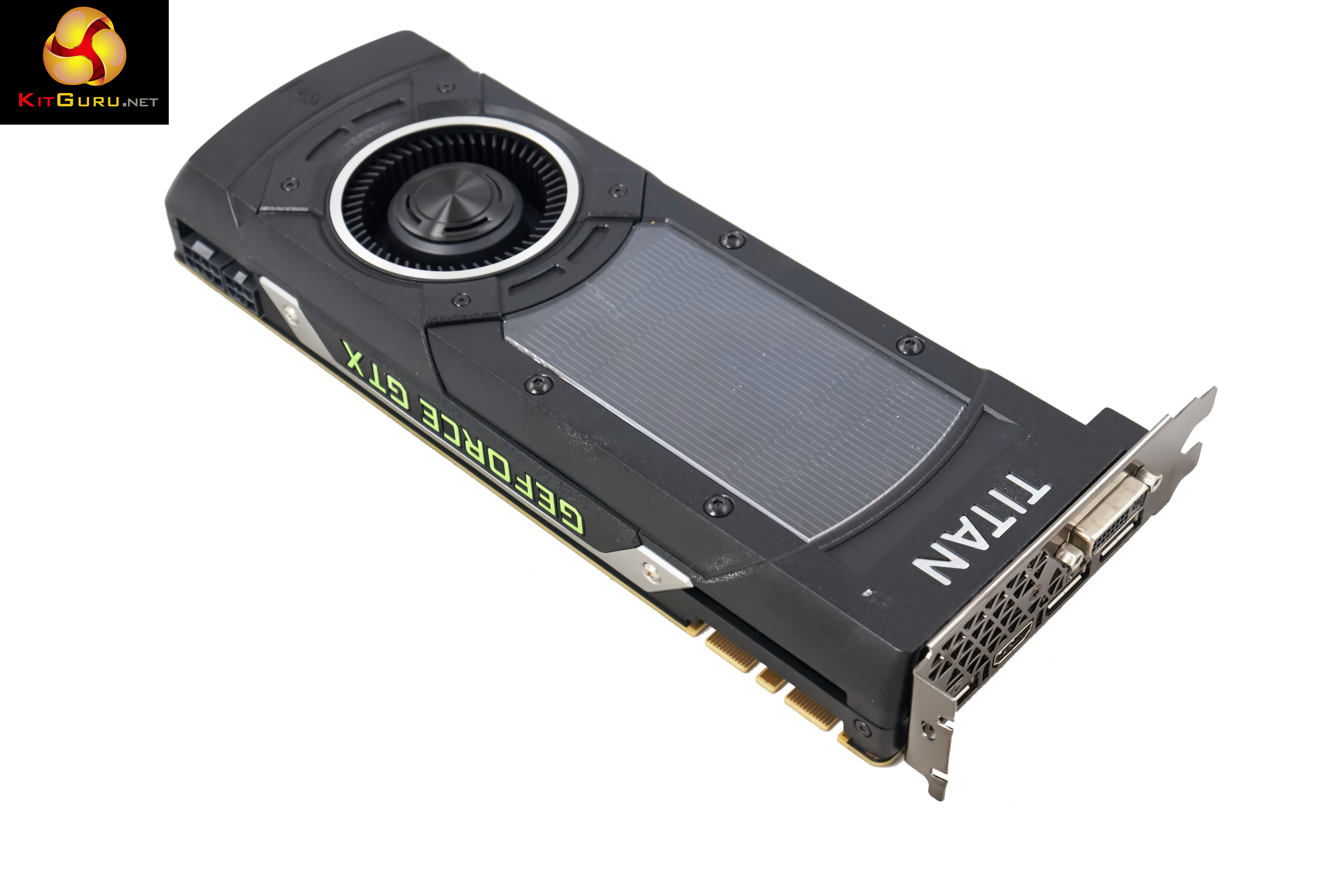

Introducing the Nvidia Titan X 12GB by MacVidCards, one of the fastest GPUs available for the Mac Pro. Mac Store UK is the authorised distributor for MacVidCards; as such, this card boasts boot screen support from all ports and PCI Express 2.0 in Windows. The Titan X for Mac Pro is supplied with the correct power cables to run the card from the Mac Pro’s internal power supply.

We strive to dispatch all items within 24 working hours of the payment clearing. If for any reason there is a delay to the dispatch of your item we will contact you.

UK

We ship all our items either by UPS or DPD to most geographical areas of the UK. This service is fully trackable and insured. A signature will be required. If nobody is available to sign for your item, an advisory card will be left informing you how to get your item. We can also offer you enhanced delivery options, from timed weekday delivery to Saturday guaranteed.

European Mainland

UPS Standard or DPD Classic (3 – 5 working days): This option travels by road in Europe. Typically, the closer you are to the UK the sooner your item will arrive. This service is fully trackable and insured. A signature is required for your item. If nobody is available to sign for the item the courier will leave an advisory card explaining the next steps to get your item.

UPS Express or DPD Air (1-2 working days): This option travels by air in Europe. Your distance from major airports will determine how long it takes to get to you. This service is fully trackable and insured. A signature is required for your item. If nobody is available to sign for the item the courier will leave an advisory card explaining the next steps to get your item. A contact telephone number is required for this service.

Rest of the World

UPS Express (2-5 working days): This is an airmail service. This service is fully trackable and insured. A signature is required for your item. If nobody is available to sign for the item the courier will leave an advisory card explaining the next steps to get your item. A contact telephone number is required for this service.

Mac Store UK
Unit 10 Regent House, Princess Court, Beam Heath Way, Nantwich CW5 6PQ
info@macstoreuk.com

Your right of Cancellation

The rights of cancellation set out below apply to any agreement between you and us save insofar as the agreement is in respect of computer software if it is unsealed by you. You have a right to cancel the agreement at any time before the expiry of a period of 30 working days beginning with the day after the day on which you receive the goods. You may cancel by giving us notice in any of the following ways:

1. By a notice in writing which you leave at our address (given above);
2. By a notice in writing which you send by post to our address (given above);
3. By electronic mail to our electronic mail address;

and the notice shall operate to cancel the agreement between us.

If you cancel the agreement:

1. You must request a returns number from us and then return the goods to us at the address given above;
2. If your item develops a fault within the first 30 days we will arrange for the collection of your item at our cost. You will need to take all reasonable steps to package the item securely and as you received it. We can then either offer you a full refund or an exchange.

The goods must be returned to us at your cost (please note the definition of goods given above). The goods must be unused or unaltered. This includes downloading of software and alterations to hardware.

Goods must also be returned to us in the condition they were sold, i.e. Also used within the Manufacturers guidelines and not be subject to any damage.

You are under a duty to take reasonable care of the goods (including reusable packaging, manuals etc) until they are returned to us 5. You are under a duty to take reasonable care to see that they are received by us and not damaged in transit; 6. We will charge you the direct costs to us of recovering any goods supplied by us if you fail to return the goods to us.

Deep learning is a field with intense computational requirements and the choice of your GPU will fundamentally determine your deep learning experience. With no GPU this might look like months of waiting for an experiment to finish, or running an experiment for a day or more only to see that the chosen parameters were off and the model diverged. With a good, solid GPU, one can quickly iterate over designs and parameters of deep networks, and run experiments in days instead of months, hours instead of days, minutes instead of hours. So making the right choice when it comes to buying a GPU is critical.

So how do you select the GPU which is right for you? This blog post will delve into that question and will lend you advice which will help you to make a choice that is right for you.

Having a fast GPU is very important when one begins to learn deep learning, as it allows for a rapid gain in practical experience, which is key to building the expertise with which you will be able to apply deep learning to new problems. Without this rapid feedback, it just takes too much time to learn from one’s mistakes, and it can be discouraging and frustrating to go on with deep learning. With GPUs, I quickly learned how to apply deep learning on a range of Kaggle competitions, and I managed to earn second place in the Partly Sunny with a Chance of Hashtags Kaggle competition, where the task was to predict weather ratings for a given tweet. In the competition, I used a rather large two-layer deep neural network with rectified linear units and dropout for regularization, and this deep net barely fit into my 6GB GPU memory. The GTX Titan GPUs that powered me in the competition were a main factor in my reaching 2nd place.

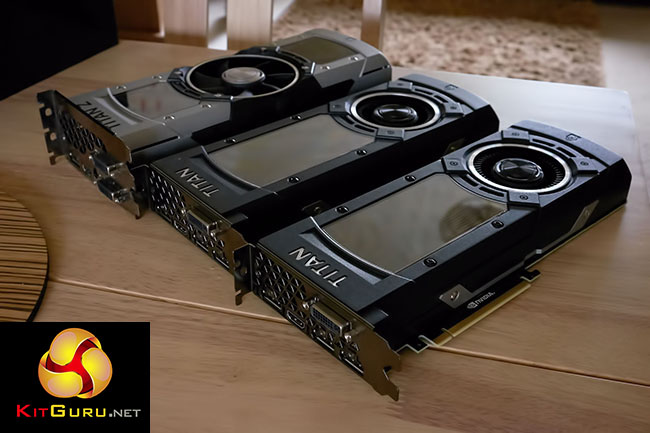

Should I get multiple GPUs?

Excited by what deep learning can do with GPUs, I plunged myself into multi-GPU territory by assembling a small GPU cluster with a 40Gbit/s InfiniBand interconnect. I was thrilled to see if even better results could be obtained with multiple GPUs. I quickly found that it is not only very difficult to parallelize neural networks on multiple GPUs efficiently, but also that the speedup was only mediocre for dense neural networks. Small neural networks could be parallelized rather efficiently using data parallelism, but larger neural networks like the one I used in the Partly Sunny with a Chance of Hashtags Kaggle competition received almost no speedup.

I analyzed parallelization in deep learning in depth, developed a technique to increase the speedups in GPU clusters from 23x to 50x for a system of 96 GPUs, and published it at ICLR 2016. In my analysis, I also found that convolutional and recurrent networks are rather easy to parallelize, especially if you use only one computer or 4 GPUs.

So while modern tools are not highly optimized for parallelism you can still attain good speedups.

Figure 1: Setup in my main computer: You can see three GPUs and an InfiniBand card. Is this a good setup for doing deep learning?

The user experience of using parallelization techniques in the most popular frameworks is also pretty good now compared to three years ago. Their algorithms are rather naive and will not scale to GPU clusters, but they deliver good performance for up to 4 GPUs. For convolution, you can expect a speedup of 1.9x/2.8x/3.5x for 2/3/4 GPUs; for recurrent networks, the sequence length is the most important parameter, and for common NLP problems one can expect similar or slightly worse speedups than for convolutional networks. Fully connected networks usually have poor performance for data parallelism, and more advanced algorithms are necessary to accelerate these parts of the network.
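To put the quoted convolution speedups in perspective, it can help to express them as a fraction of ideal linear scaling. The snippet below is only a small illustration using the numbers given in the text; the function name `parallel_efficiency` is made up for this example.

```python
# Speedups quoted in the text for convolutional nets on 2, 3 and 4 GPUs.
quoted_speedups = {2: 1.9, 3: 2.8, 4: 3.5}


def parallel_efficiency(speedup: float, num_gpus: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfect scaling)."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return speedup / num_gpus


efficiencies = {n: parallel_efficiency(s, n) for n, s in quoted_speedups.items()}

for n, eff in efficiencies.items():
    print(f"{n} GPUs: {quoted_speedups[n]:.1f}x speedup, {eff:.1%} of linear scaling")
```

Read this way, each additional GPU still helps, but the share of the ideal speedup shrinks as GPUs are added.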

So today using multiple GPUs can make training much more convenient due to the increased speed, and if you have the money for it, multiple GPUs make a lot of sense.

Using Multiple GPUs Without Parallelism

Another advantage of using multiple GPUs, even if you do not parallelize algorithms, is that you can run multiple algorithms or experiments separately on each GPU.

You gain no speedups, but you get more information about your performance by using different algorithms or parameters at once. This is highly useful if your main goal is to gain deep learning experience as quickly as possible, and it is also very useful for researchers who want to try multiple versions of a new algorithm at the same time. This is psychologically important if you want to learn deep learning.

The shorter the interval between performing a task and receiving feedback for that task, the better the brain is able to integrate relevant memory pieces for that task into a coherent picture. If you train two convolutional nets on separate GPUs on small datasets you will more quickly get a feel for what is important to perform well; you will more readily be able to detect patterns in the cross-validation error and interpret them correctly. You will be able to detect patterns which give you hints on what parameter or layer needs to be added, removed, or adjusted. I personally think using multiple GPUs in this way is more useful, as one can quickly search for a good configuration. Once one has found a good range of parameters or architectures, one can then use parallelism across multiple GPUs to train the final network.
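One way to run separate experiments on separate GPUs is to give each training process its own GPU through the `CUDA_VISIBLE_DEVICES` environment variable. The sketch below only plans the assignment and prepares the environment; the hyperparameter grid and the helper names `assign_to_gpus` and `environment_for` are assumptions made for this illustration, not something from the original post.

```python
import itertools
import os


def assign_to_gpus(configs, num_gpus):
    """Pair each configuration with a GPU index, cycling through the GPUs."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return [(i % num_gpus, cfg) for i, cfg in enumerate(configs)]


def environment_for(gpu_index):
    """Copy of the current environment that exposes a single GPU to a child process."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    return env


# Hypothetical search space: two learning rates times two dropout rates.
grid = [
    {"learning_rate": lr, "dropout": p}
    for lr, p in itertools.product([0.01, 0.001], [0.2, 0.5])
]

jobs = assign_to_gpus(grid, num_gpus=2)
for gpu, cfg in jobs:
    # A real launcher could start a (hypothetical) training script here, e.g.
    # with subprocess.Popen([...], env=environment_for(gpu)).
    print(f"GPU {gpu}: {cfg}")
```

With more configurations than GPUs, the jobs that share a GPU index would typically be run one after another rather than at the same time.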

So overall, one can say that one GPU should be sufficient for almost any task, but that multiple GPUs are becoming more and more important to accelerate your deep learning models. Multiple cheap GPUs are also excellent if you want to learn deep learning quickly. I personally would rather have many small GPUs than one big one, even for my research experiments.

NVIDIA vs AMD vs Intel vs Google vs Amazon

NVIDIA: The Leader

NVIDIA’s standard libraries made it very easy to establish the first deep learning libraries in CUDA, while there were no such powerful standard libraries for AMD’s OpenCL. This early advantage combined with strong community support from NVIDIA increased the size of the CUDA community rapidly. This means if you use NVIDIA GPUs you will easily find support if something goes wrong, you will find support and advice if you do program CUDA yourself, and you will find that most deep learning libraries have the best support for NVIDIA GPUs. This is a very strong point for NVIDIA GPUs.

On the other hand, NVIDIA now has a policy that the use of CUDA in data centers is only allowed for Tesla GPUs and not GTX or RTX cards. It is not clear what is meant by “data centers”, but this means that organizations and universities are often forced to buy the expensive and cost-inefficient Tesla GPUs due to fear of legal issues. However, Tesla cards have no real advantage over GTX and RTX cards and cost up to 10 times as much.

That NVIDIA can just do this without any major hurdles shows the power of their monopoly: they can do as they please and we have to accept the terms. If you pick the major advantages that NVIDIA GPUs have in terms of community and support, you will also need to accept that you can be pushed around by them at will.

AMD: Powerful But Lacking Support

HIP unifies NVIDIA and AMD GPUs under a common programming language which is compiled into the respective GPU language before it is compiled to GPU assembly. If we had all our GPU code in HIP this would be a major milestone, but this is rather difficult because it is difficult to port the TensorFlow and PyTorch code bases. TensorFlow has some support for AMD GPUs and all major networks can be run on AMD GPUs, but if you want to develop new networks some details might be missing which could prevent you from implementing what you need. The ROCm community is also not too large and thus it is not straightforward to fix issues quickly. There also does not seem to be much money allocated for deep learning development and support from AMD’s side, which slows the momentum. However, the next AMD GPU will be a computing powerhouse which will feature Tensor-Core-like compute units. Overall I think I still cannot give a clear recommendation for AMD GPUs for ordinary users that just want their GPUs to work smoothly.

More experienced users should have fewer problems, and by supporting AMD GPUs and ROCm/HIP developers they contribute to the combat against the monopoly position of NVIDIA, as this will greatly benefit everyone in the long term. If you are a GPU developer and want to make important contributions to GPU computing, then an AMD GPU might be the best way to make a good impact over the long term. For everyone else, NVIDIA GPUs might be the safer choice.

The GT 750M comes in a version with DDR3 memory and a version with GDDR5 memory; the GDDR5 version will be about thrice as fast as the DDR3 version. With a GDDR5 model you will probably run three to four times slower than typical desktop GPUs, but you should still see a good speedup of 5-8x over a desktop CPU. So a GDDR5 750M will be sufficient for running most deep learning models. If you have the DDR3 version, then it might be too slow for deep learning (smaller models might take a day; larger models a week or so).

Sometimes it is good, but often it isn’t – it depends on the use case. One application of GPUs for hash generation is bitcoin mining. However, the main measure of success in bitcoin mining (and cryptocurrency mining in general) is to generate as many hashes as possible per watt of energy; GPUs are in the mid-field here, beating CPUs but beaten by FPGAs and other low-energy hardware. In the case of keypair generation, e.g.

In MapReduce, you often do little computation but lots of IO operations, so that GPUs cannot be utilized efficiently. For many applications GPUs are significantly faster in one case, but not in another similar case, e.g. for some but not all regular expressions, and this is the main reason why GPUs are not used in other cases.

Thank you for the great post. Could you say something about pairing a new card with an older CPU? For example, I have a 4-core Intel Q6600 from 2007 with 8GB of RAM (without the possibility to upgrade). Could this be a bottleneck if I choose to buy a new GPU for CUDA and ML? I’m also not sure which one is the better choice: a GTX 780 with 2GB of RAM or a GTX 970 with 4GB of RAM. The 780 has more cores, but they are a bit slower. A nice list of characteristics; still, I’m not sure which would be the better choice. I would use the GPU for all kinds of problems, perhaps some with smaller networks, but I wouldn’t be shy of trying something bigger when I feel comfortable enough. What would you recommend?

Hi enedene, thanks for your question!

Your CPU should be sufficient and should slow you down only slightly (1-10%). My post is now a bit outdated as the new Maxwell GPUs have been released. The Maxwell architecture is much better than the Kepler architecture and so the GTX 970 is faster than the GTX 780 even though it has lower bandwidth. So I would recommend getting a GTX 970 over a GTX 780 (of course, a GTX 980 would be better still, but a GTX 970 will be fine for most things, even for larger nets).

For low budgets I would still recommend a GTX 580 from eBay. I will update my post next week to reflect the new information.

I have only superficial experience with most libraries, as I usually used my own implementations (which I adjusted from problem to problem). However, from what I know, Torch7 is really strong for non-image data, but you will need to learn some Lua to adjust some things here and there. I think pylearn2 is also a good candidate for non-image data, but if you are not used to theano then you will need some time to learn how to use it in the first place. Libraries like deepnet – which is programmed on top of cudamat – are much easier to use for non-image data, but the available algorithms are partially outdated and some algorithms are not available at all. I think you always have to change a few things in order to make it work for new data, and so you might also want to check out libraries like caffe and see if you like the API better than other libraries. A neater API might outweigh the cost of needing to change stuff to make it work in the first place. So the best advice might be just to look at the documentation and examples, try a few libraries, and then settle for something you like and can work with.

Thanks for your comment, Monica. This is indeed something I overlooked, and it is actually quite an important issue when selecting a GPU. I hope to address this in an update I aim to write soon. To answer your question: the increased memory usage stems from memory that is allocated during the computation of the convolutions to increase computational efficiency. Because image patches overlap, one saves a lot of computation when one saves some of the image values to then reuse them for an overlapping image patch. Albeit at a cost of device memory, one can achieve tremendous increases in computational efficiency when one does this cleverly, as Alex does in his CUDA kernels. Other solutions that use fast Fourier transforms (FFTs) are said to be even faster than Alex’s implementation, but these need even more memory. If you are aiming to train large convolutional nets, then a good option might be to get a normal GTX Titan from eBay.

If you use convolutional nets heavily, two or even four GTX 980s (much faster than a Titan) also make sense if you plan to use the convnet2 library, which supports dual GPU training. However, be aware that NVIDIA might soon release a Maxwell GTX Titan equivalent which would be much better than the GTX 980 for this application.

Hi, I am planning to replicate the ImageNet object identification problem using CNNs as published in a recent paper by G. Hinton et al. (just as an exercise to learn about deep learning and CNNs). What GPU would you recommend, considering I am a student? I heard the original paper used 2 GTX 580s and yet took a week to train the 7-layer deep network. Is this true?

Could the same be done using a single GTX 580 or GTX 970? How much time will it take to train the same on a GTX 970 or a single GTX 580? ( A week of time is okay for me ) 2. What kind of modifications in the original implementation could I do ( like 5 or 6 hidden layers instead of 7, or lesser number of objects to detect etc. ), to make this little project of mine easier to implement on a lower budget while at the same time helping me learn about the deep nets and CNNs? What kind of libraries would you recommend for the same?

Torch7 or pylearn2 / theano ( I am fairly proficient in python but not so much in lua ). Is there a small scale implementation of this anywhere in github etc?

Also thanks a lot for the wonderful post.

1. All GPUs with 4 GB should be able to run the network; you can run slightly smaller networks on one GTX 580; these networks will always take more than 5 days, even on the fastest GPUs.
2. Read about convolutional neural networks, then you will understand what the layers do and how you can use them. This is a good, thorough tutorial:
3. I would try pylearn2, convnet2, and caffe and pick whichever suits you best.
4. The implementations are generally general implementations, i.e. you run small and large networks with the same code; the only difference is in the parameters to a function. If by “small” you mean a less complex API, I heard good things about the Lasagne library.

It looks like it is vertical, but it is not. I took that picture while my computer was lying on the ground. However, I use the Cooler Master HAF X for both of my computers. I bought this tower because it has a dedicated large fan for the GPU slot – in retrospect I am unsure if the fan is helping that much. There is another tower I saw that actually has vertical slots, but again I am unsure if that helps so much. I would probably opt for liquid cooling for my next system. It is more difficult to maintain, but has much better performance. With liquid cooling, almost any case that fits the mainboard and GPUs would do.

Great article! You did not talk about the number of cores present in a graphics card (CUDA cores in case of nVidia).

My perception was that a card with more cores will always be better, because more cores lead to better parallelism and hence faster training, given that the memory is enough. Please correct me if my understanding is wrong. Which card would you suggest for RNNs and a data size of 15-20 GB (Wikipedia/Freebase size)?

A 960 would be good enough? Or should I go with a 970 one?

580 is not available in my country.

Thanks for your comment. CUDA cores relate more closely to FLOPS and not to bandwidth, but it is the bandwidth that you want for deep learning. So CUDA cores are a bad proxy for performance in deep learning. What you really want is a high memory bus width (e.g. 384 bits) and a high memory clock (e.g. 7000MHz) – anything other than that hardly matters for deep learning. Mat Kelcey did some tests with theano for the GTX 970 and it seems that the GPU has no memory problems for compute – so the GTX 970 might be a good choice then.

Hi Yakup, I wanted to write a blog post with detailed advice about this topic sometime in the next two weeks, and if you can wait for that you might get some insights into what hardware is right for you. But I also want to give you some general, less specific advice. If you might be getting more GPUs in the future, it is better to buy a motherboard with PCIe 3.0 and 7 PCIe x16 slots (one GPU typically takes two slots). If you will use only 1-2 GPUs, then almost any motherboard will do (PCIe 2.0 would also be okay). Plan to get a power supply unit (PSU) which has enough watts to power all GPUs you will get in the future (e.g. if you will get a maximum of 4, then buy a 1400+ watt PSU). The CPU does not need to be fast or have many cores.

Twice as many threads as you have GPUs is almost always sufficient (for Intel CPUs we mostly have: 1 core = 2 threads); any CPU with more than 3GHz is okay; less than 3GHz might give you a tiny penalty in speed of about 1-3%. Fast memory caches are often more important for CPUs, but in the big picture they also contribute little to overall performance; a typical CPU with slow memory will decrease the overall performance by a few percent. One can work around a small RAM by loading data sequentially from your hard drive into your RAM, but it is often more convenient to have a larger RAM; two times the RAM your GPU has gives you more freedom and flexibility (e.g. 8GB RAM for a GTX 980). An SSD will make it more comfortable to work, but similarly to the CPU it offers little performance gain (0-2%; depends on the software implementation); an SSD is nice if you need to preprocess large amounts of data and save them into smaller batches, e.g. preprocessing 200GB of data and saving it into batches of 2GB is a situation in which SSDs can save a lot of time.

If you decide to get an SSD, a good rule might be to buy an SSD that is twice as large as your largest data set. If you get an SSD, you should also get a large hard drive where you can move old data sets to.
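The rules of thumb above (two CPU threads per GPU, RAM of twice the GPU memory, an SSD twice the size of the largest data set) can be written down as a tiny helper. This is only a sketch of those heuristics; the names `SystemSuggestion` and `suggest_system` are invented for this example, and the 4 GB figure for the GTX 980 is the card's memory size as implied by the "8GB RAM for a GTX 980" example.

```python
from dataclasses import dataclass


@dataclass
class SystemSuggestion:
    cpu_threads: int
    ram_gb: float
    ssd_gb: float


def suggest_system(num_gpus: int, gpu_memory_gb: float,
                   largest_dataset_gb: float) -> SystemSuggestion:
    """Apply the rough sizing heuristics from the text; not a hard requirement."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return SystemSuggestion(
        cpu_threads=2 * num_gpus,       # twice as many threads as GPUs
        ram_gb=2 * gpu_memory_gb,       # two times the RAM your GPU has
        ssd_gb=2 * largest_dataset_gb,  # SSD twice as large as the largest data set
    )


# Example: one GTX 980 (4 GB) and a 200 GB data set.
example = suggest_system(num_gpus=1, gpu_memory_gb=4, largest_dataset_gb=200)
print(example)
```

These are convenience guidelines rather than performance requirements; as the text notes, going below them usually costs only a few percent.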

So the bottom line is, a $1000 system should perform at least at 95% of a $2000 system; but a $2000 system offers more convenience and might save some time for preprocessing.

Thanks for your comment, Dewan. An AMD CPU is just as good as an Intel CPU; in fact I might favor AMD over Intel CPUs because Intel CPUs pack just too much unnecessary punch – one simply does not need so much processing power, as all the computation is done by the GPU. The CPU is only used to transfer data to the GPU and to start kernels (which is little more than a function call). Transferring data means that the CPU should have a high memory clock and a memory controller with many channels. This is often not advertised on CPUs as it is not so relevant for ordinary computation, but you want to choose the CPU with the larger memory bandwidth (memory clock times memory controller channels). The clock on the processor itself is less relevant here. A 4GHz 8-core AMD CPU might be a bit overkill. You could definitely settle for less without any degradation in performance. But what you say about PCIe 3.0 support is important (some new Haswell CPUs do not support 40 lanes, but only 32; I think most AMD CPUs support all 40 lanes). As I wrote above, I will write a more detailed analysis in a week or two.

Thanks for the great post! I have one question, however: I’m in the “started DL, serious about it” group and have a decent PC already, although without an NVIDIA GPU.

I’m also a 1st-year student, so a GTX 980 is out of the question 😉 The question is: what do you think about Amazon EC2? I could easily buy a GTX 580, but I’m not sure if it’s the best way to spend my money. And when I think about more expensive cards (like the 980 or the ones to be released in 2016) it seems like running a spot instance for 10 cents per hour is a much better choice. What could be the main drawbacks of doing DL on EC2 instead of my own hardware?

Hi Tim, I am a bit confused between buying your recommended GTX 580 and a new GTX 750 (Maxwell). The models which I am finding on eBay are around 120 USD, but they are 1.5GB models. One big problem with the 580 would be buying a new PSU (500 watt). As you stated that the Maxwell architecture is the best, would the GTX 750 (512 CUDA cores, 1GB GDDR5) be a good choice? It will be about 95 USD and I can also do without an expensive PSU. My research area is mainly text mining and NLP, not much with images. Other than this I would do Kaggle competitions.
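For the cloud-versus-own-hardware question raised above, a rough break-even calculation can frame the decision. The sketch below uses only the prices mentioned in these comments (about 120 USD for a used card, 10 cents per hour for a spot instance) and deliberately ignores electricity, storage, data transfer and any difference in GPU speed; `break_even_hours` is a made-up helper name.

```python
def break_even_hours(card_price_usd: float, hourly_rate_usd: float) -> float:
    """Hours of rented GPU time that cost as much as buying the card outright."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly_rate_usd must be positive")
    return card_price_usd / hourly_rate_usd


hours = break_even_hours(card_price_usd=120, hourly_rate_usd=0.10)
print(f"Break-even after about {hours:.0f} GPU hours ({hours / 24:.0f} days of continuous use)")
```

Under these simplified assumptions, someone who expects to train for well over that many hours would come out ahead with their own card, while occasional use favors renting.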

Any comments on the new Maxwell architecture Titan X? For $1000 US it seemingly has a massive memory bandwidth bump – for example, the GTX 980 specs claim 224 GB/sec with the Maxwell architecture, while this has 336 GB/sec (and also comes stock with 12GB VRAM!). Along that line, are the memory bandwidth specs not apples-to-apples comparisons across different NVIDIA architectures? The 780 Ti also claims 336 GB/sec with the Kepler architecture, but you claim the 980 with 224 GB/sec bandwidth can out-benchmark it for basic neural net activities? Appreciate this post.

You can compare bandwidth within a microarchitecture (Maxwell: GTX Titan X vs GTX 980, or Kepler: GTX 680 vs GTX 780), but across architectures you cannot do that (Maxwell card X vs Kepler card X). Even very minute changes in the design of a microchip can make a vast difference in bandwidth, FLOPS, or FLOPS/watt.

Kepler was about FLOPS/watt and double precision performance for scientific computing (engineering, simulation etc.), but the complex design led to poor utilization of the bandwidth (memory bus times memory clock). With Maxwell the NVIDIA engineers developed an architecture which has both energy efficiency and good bandwidth utilization, but the double precision suffered in turn; you just cannot have everything.
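The "memory bus times memory clock" relationship can be checked against the bandwidth figures quoted in this discussion. The sketch below computes theoretical peak bandwidth from the bus width and the effective memory clock; the 384-bit and 7000 MHz values for the Titan X come from the text, while the 256-bit bus for the GTX 980 is taken from the card's public specification and is an assumption as far as this page is concerned.

```python
def memory_bandwidth_gb_per_s(bus_width_bits: int, effective_clock_mhz: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (bus width times effective memory clock)."""
    bytes_per_transfer = bus_width_bits / 8               # bits -> bytes moved per transfer
    transfers_per_second = effective_clock_mhz * 1e6      # MHz -> transfers per second
    return bytes_per_transfer * transfers_per_second / 1e9


titan_x = memory_bandwidth_gb_per_s(bus_width_bits=384, effective_clock_mhz=7000)
gtx_980 = memory_bandwidth_gb_per_s(bus_width_bits=256, effective_clock_mhz=7000)  # 256-bit: assumed spec

print(f"GTX Titan X: {titan_x:.0f} GB/s")
print(f"GTX 980:     {gtx_980:.0f} GB/s")
```

These are peak numbers only; as discussed above, how well an architecture actually utilizes that bandwidth differs between Kepler and Maxwell, which is why such figures are comparable only within one architecture.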

Thus Maxwell cards make great gaming and deep learning cards, but poor cards for scientific computing. The GTX Titan X is so fast because it has a very large memory bus width (384 bit), an efficient architecture (Maxwell) and a high memory clock rate (7 GHz), and all this in one piece of hardware.

This is a good point, Alex. I think you can also get very good results with conv nets that feature less memory-intensive architectures, but the field of deep learning is moving so fast that 6 GB might soon be insufficient. Right now, I think one still has quite a bit of freedom with 6 GB of memory. A batch and activation unload/load procedure would be limited by the 8GB/s bandwidth between GPU and CPU, so there will definitely be a decrease in performance if you unload/load a majority of the needed activation values. Because the bandwidth bottlenecks are very similar to those in parallelism, one can expect a decrease in performance of about 10-30% if you unload/load the whole net. So this would be an acceptable procedure for very large conv nets; however, smaller nets with fewer parameters would still be more practical, I think.

EVGA cards often have many extra features (dual BIOS, extra fan design) and a bit higher clock and/or memory, but their cards are more expensive too.

However, with respect to price/performance it often depends on the individual card which one is best, and one cannot draw general conclusions from a brand. Overall, the fan design is often more important than the clock rates and extra features. The best way to determine the best brand is often to look for references of how hot one card runs compared to another and then think about whether the price difference justifies the extra money. Most often though, one brand will be just as good as the next and the performance gains will be negligible, so going for the cheapest brand is a good strategy in most cases.

Hey Tim, you have been a big help – I have included the results from the CUDA bandwidth test (which is included in the samples of the basic CUDA install). This is for a GTX 980 running on 64-bit Linux with an i3770 CPU and PCIe 2.0 lanes on the motherboard. Does this look reasonable? Are they indicative of anything? Are the device/host and host/device speeds typically the bottleneck you speak of?

Hi Tim, great post! I feel lucky that I chose a 580 a couple of years ago when I started experimenting with neural nets. If there had been an article like this then I wouldn’t have been so nervous!

I’m wondering if you have any quick tips for fresh Ubuntu installs with current nvidia cards? When I got my used system running a couple of years ago it took quite a while and I fought with drivers, my 580 wasn’t recognized, etc.

On the table next to me is a brand new build that I just put together that I’m hoping will streamline my ML work. It’s an Intel X99 system with a Titan X (I bought into the hype!). Windows went on fine (although I will rarely use it) and Ubuntu will go on shortly. I’m not looking forward to wrestling with drivers, so any tips would be greatly appreciated. If you have a cheat-sheet or want to do a post, I’m sure it will be warmly welcomed by many, especially me!